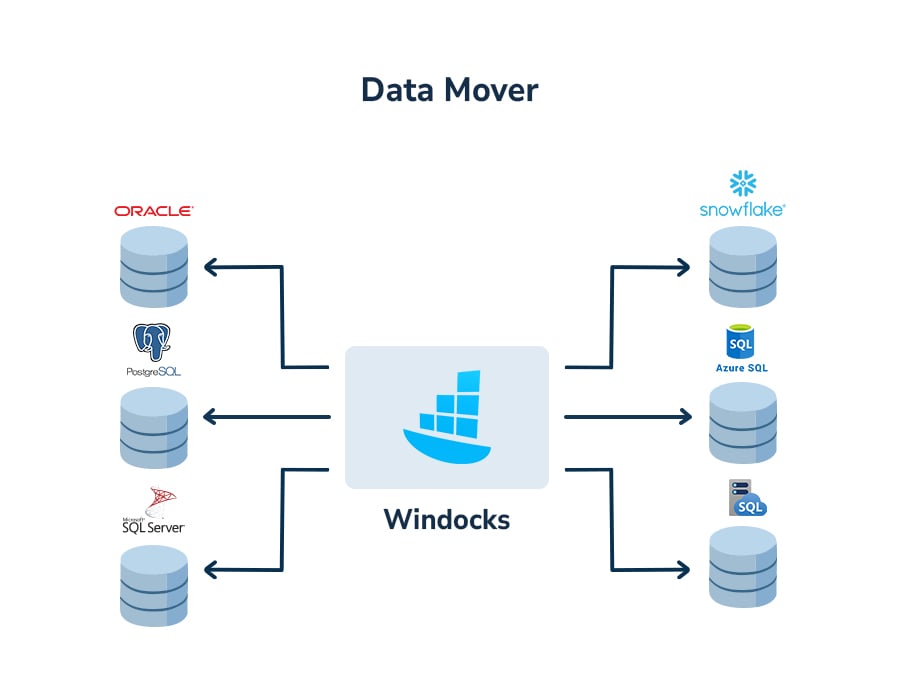

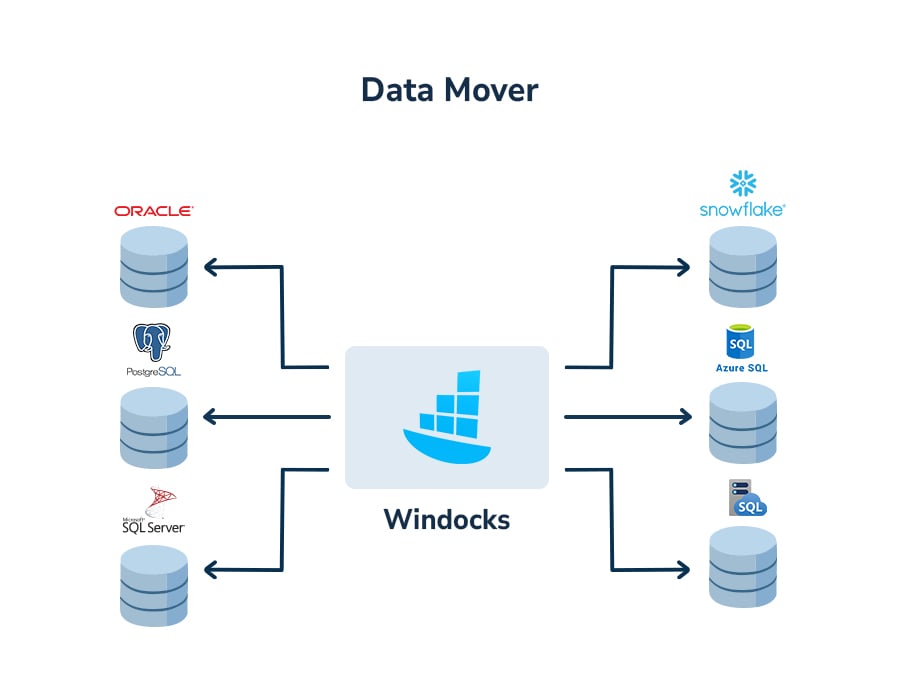

Database and Cloud Mover

Modern enterprises benefit from a growing range of database platforms and cloud services.

The increasing diversity of data platforms naturally leads to data movement, from one-time migrations to daily and near-real-time flows.

The Windocks data mover provides a high-performance service, with automated schema and data type mapping, between SQL Server, PostgreSQL, MySQL, Snowflake, Aurora, RDS, Azure SQL, and Azure SQL Managed Instance.

Improving access to SQL Managed Instance and Azure SQL data

A popular use of the Windocks data mover is to simplify access to Azure SQL Managed Instance and Azure SQL data. Complete databases are moved to a SQL Server VM, where they can be cloned to support dev/test, automated CI, or reporting and analytics.

More on Windocks Database Mover

Database moves simplified

Windocks handles the complexities of moving a full database or a subset: it creates the target tables and relationships, maps the data types, and writes the millions of rows of data. Simply connect to the source, define the target, and "move." The Windocks data mover is fast, with throughput of up to 20 million rows per minute, and it never writes to the source database.
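To make "data type mapping" concrete, here is a minimal sketch of the idea in Python. The mapping table and the helper names (`map_column`, `create_table_ddl`) are illustrative assumptions, not the mapping or API that Windocks itself uses; the sketch only shows how source column types might be translated into a target `CREATE TABLE` statement.

```python
# Illustrative SQL Server -> PostgreSQL type map.
# This is an assumption for the example, not Windocks' actual mapping table.
SQLSERVER_TO_POSTGRES = {
    "int": "integer",
    "bigint": "bigint",
    "bit": "boolean",
    "nvarchar": "varchar",
    "datetime2": "timestamp",
    "uniqueidentifier": "uuid",
    "varbinary": "bytea",
}


def map_column(name, source_type, length=None):
    """Return a PostgreSQL column definition for one SQL Server column."""
    base = source_type.lower()
    if base not in SQLSERVER_TO_POSTGRES:
        raise ValueError(f"no mapping for type {source_type!r}")
    target = SQLSERVER_TO_POSTGRES[base]
    if target == "varchar":
        # A length-less nvarchar (e.g. nvarchar(max)) becomes unbounded text.
        target = f"varchar({length})" if length is not None else "text"
    return f'"{name}" {target}'


def create_table_ddl(table, columns):
    """Build CREATE TABLE DDL from (name, type[, length]) tuples."""
    cols = ",\n  ".join(map_column(*col) for col in columns)
    return f'CREATE TABLE "{table}" (\n  {cols}\n);'


print(create_table_ddl("customers", [
    ("id", "int"),
    ("name", "nvarchar", 100),
    ("is_active", "bit"),
]))
```

A real mover also has to carry over keys, relationships, precision, and defaults; the sketch leaves those out to keep the type translation visible.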

Automated, fast subsets

Windocks subsetting handles real-world challenges such as circular data dependencies and composite keys, and produces the desired subset in a matter of minutes (or seconds). Connect to the source database and specify the subset size, or use Table filters with Where clauses. You can also specify individual source rows to include.
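The sketch below illustrates the general idea behind a referentially intact subset: start from the rows matched by a Where clause, then follow foreign keys to pull in every parent row they depend on. It is not Windocks' algorithm. The function name `subset_with_parents`, the `FKS` structure, and the sample tables are hypothetical, and it assumes every table has a single-column key named `id` (so composite keys are out of scope here).

```python
import sqlite3


def subset_with_parents(conn, table, where, fks):
    """Pick rows from `table` matching `where`, plus every parent row they reference.

    fks maps table -> list of (fk_column, parent_table).
    Returns {table: set of id values}.
    """
    selected = {}
    rows = conn.execute(f"SELECT id FROM {table} WHERE {where}").fetchall()
    queue = [(table, row[0]) for row in rows]
    while queue:
        tbl, key = queue.pop()
        if key in selected.setdefault(tbl, set()):
            continue  # already visited; this also stops circular references
        selected[tbl].add(key)
        for fk_col, parent in fks.get(tbl, []):
            row = conn.execute(
                f"SELECT {fk_col} FROM {tbl} WHERE id = ?", (key,)
            ).fetchone()
            if row and row[0] is not None:
                queue.append((parent, row[0]))
    return selected


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, referred_by INTEGER);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
INSERT INTO customers VALUES (1, 2), (2, 1), (3, NULL);  -- 1 and 2 refer to each other
INSERT INTO orders VALUES (10, 1, 250.0), (11, 3, 40.0), (12, 2, 15.0);
""")

FKS = {
    "orders": [("customer_id", "customers")],
    "customers": [("referred_by", "customers")],
}

subset = subset_with_parents(conn, "orders", "total > 100", FKS)
print(subset)
```

Here the filter selects order 10, which pulls in customer 1 and, through the circular `referred_by` link, customer 2. The visited check is what keeps the circular dependency from looping forever.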

Comprehensive data

Work with SQL Server, PostgreSQL, MySQL, Aurora, Snowflake, Azure SQL Managed Instance, Azure SQL, AWS RDS, flat files, Oracle, and DB2.

Automated schema drift alerts

Once defined, a subset job can be automated to run daily, or as often as needed. Windocks detects schema changes automatically and alerts you to review the job so that it handles them.
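Schema drift detection can be thought of as comparing a saved snapshot of the schema with the current one. The following is a minimal sketch under that assumption; the snapshot format (`{table: {column: type}}`) and the function name `diff_schema` are hypothetical and do not describe how Windocks stores or compares schemas.

```python
def diff_schema(saved, current):
    """Compare two {table: {column: type}} snapshots and list the differences."""
    changes = []
    for table in sorted(set(saved) | set(current)):
        old, new = saved.get(table), current.get(table)
        if old is None:
            changes.append(f"table added: {table}")
        elif new is None:
            changes.append(f"table dropped: {table}")
        else:
            for col in sorted(set(old) | set(new)):
                if col not in old:
                    changes.append(f"column added: {table}.{col} ({new[col]})")
                elif col not in new:
                    changes.append(f"column dropped: {table}.{col}")
                elif old[col] != new[col]:
                    changes.append(
                        f"type changed: {table}.{col} {old[col]} -> {new[col]}"
                    )
    return changes


# Snapshot taken when the job was defined vs. the schema seen on the latest run.
saved = {"orders": {"id": "int", "total": "decimal"}}
current = {
    "orders": {"id": "bigint", "total": "decimal", "currency": "char"},
    "refunds": {"id": "int"},
}

for change in diff_schema(saved, current):
    print(change)
```

An empty result means the job can run unchanged; any entry is a reason to review the job before the next run.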

Getting started with the database mover

1) Install Windocks

Download and install the Windocks Community Edition, or email support@windocks.com for a supported pilot of the Standard or Enterprise edition. Windocks runs on Windows, Linux, Docker containers, and Kubernetes.

2) Connect to the source and target databases

Windocks supports movement between any combination of SQL Server, PostgreSQL, MySQL, Aurora, Snowflake, RDS, Azure SQL Managed Instance, and Azure SQL. Start by creating a persistent connection to the source and target instance or service.

3) Save and run the migration

It's really that simple. Windocks recreates the source schema on the target, handles the data type mapping, and efficiently writes the data to the new platform. It's fast and efficient even on slow networks, moving up to 20 million rows per minute.
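As a rough planning aid, the quoted peak rate translates into a best-case transfer time. The helper below is a back-of-the-envelope sketch, not a Windocks tool; because 20 million rows per minute is an "up to" figure, the result is a lower bound, and real runs depend on row width, network, and target platform.

```python
PEAK_ROWS_PER_MINUTE = 20_000_000  # the "up to" throughput quoted above


def best_case_minutes(row_count, rows_per_minute=PEAK_ROWS_PER_MINUTE):
    """Lower bound on transfer time, in minutes, at the given throughput."""
    return row_count / rows_per_minute


# A 500-million-row database at the peak rate.
print(best_case_minutes(500_000_000))
```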

Windocks delivers 6 powerful capabilities

Database Cloning

Create writable copies of databases in seconds instead of hours. This enables fast development, testing, and analytics, helping teams work efficiently with full, realistic environments without impacting production systems.

SQL Server containers

Run multiple isolated SQL Server instances on a single machine using Docker Windows containers. This maximizes hardware utilization, simplifies testing and deployment, and provides great support for remote teams.


Data masking

Protect sensitive data by replacing Personally Identifiable Information (PII) such as names, phone numbers, and addresses with synthetic data that is modeled to reflect the source distribution. This ensures compliance with regulatory and enterprise policies, and prevents exposure of real customer data.
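One simple way to picture masking that reflects the source distribution is a one-to-one substitution: every distinct real value is replaced by a distinct synthetic value, so the frequencies in the column stay the same. The sketch below takes that narrow interpretation. The function name `mask_column`, the sample names, and the synthetic pool are all hypothetical, and this is not a description of the Windocks masking engine.

```python
import random
from collections import Counter


def mask_column(values, synthetic_pool, seed=0):
    """Replace each distinct real value with a distinct synthetic one.

    The one-to-one mapping preserves how often each value occurs.
    """
    distinct = sorted(set(values))
    if len(distinct) > len(synthetic_pool):
        raise ValueError("synthetic pool is too small")
    rng = random.Random(seed)  # seeded so repeated runs give the same masking
    replacements = rng.sample(synthetic_pool, len(distinct))
    mapping = dict(zip(distinct, replacements))
    return [mapping[v] for v in values]


real_names = ["Ann", "Bob", "Ann", "Cy", "Ann"]
pool = ["Name-A", "Name-B", "Name-C", "Name-D"]

masked = mask_column(real_names, pool)
print(masked)
print(sorted(Counter(masked).values()) == sorted(Counter(real_names).values()))
```

The final line prints `True` because the masked column has the same frequency profile as the original, even though no real name survives.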

Feature Data

Code-free data aggregation, cleansing, and enrichment from multiple sources to create “features” for Machine Learning and AI. Feature data enables advanced analytics and ML modeling, such as predicting fraudulent transactions, improving decision-making, and driving business outcomes.

Single source of truth

Combine data from multiple databases into a unified “feature data table.” This provides accurate, consolidated information across systems, eliminates duplication, and ensures consistent reporting for analytics, compliance, and business intelligence needs.

Database subsetting

Reduce large databases to smaller, targeted datasets while maintaining all key relationships and integrity between tables. Subsetting helps accelerate testing, minimizes storage requirements, and protects sensitive information during development activities.