Code Free and Production Native Data for Machine Learning & Analytics

Apply database-level and table-level transforms to source data, including data cleansing, normalization, rich statistics, and features.

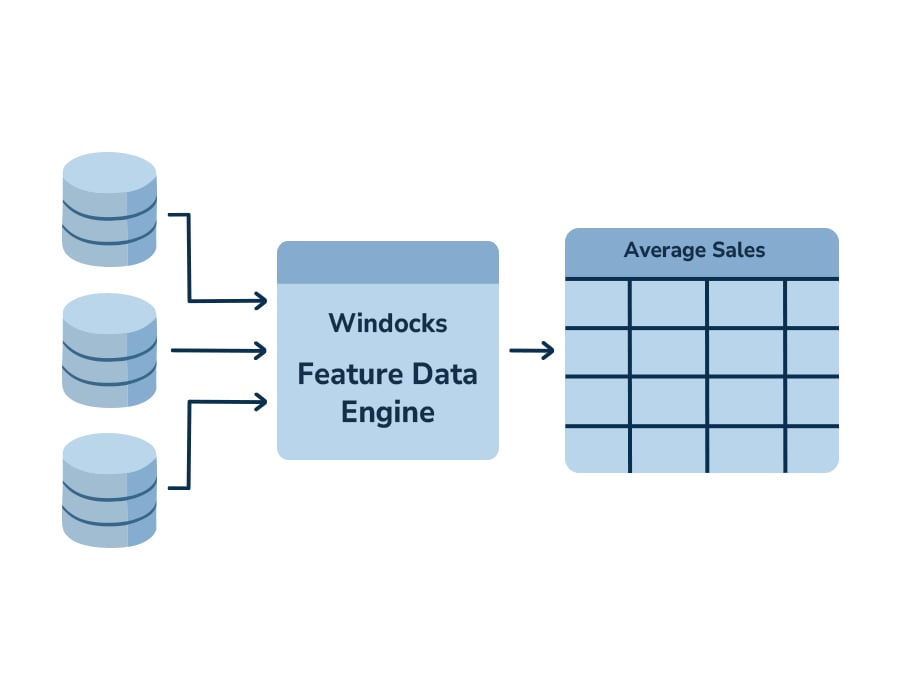

Windocks offers a new approach to feature data:

- Production native. The Windocks feature data pipeline is a repeatable, high-performance solution that delivers feature data natively on the source data platform (Oracle, SQL Server, Snowflake, etc.). Features are production-ready, which simplifies model retraining and A/B testing.

- Code free feature data development. The Windocks web UI simplifies data sourcing and transforms, without SQL or Python coding. Anyone can develop rich features in hours, work that typically requires days of coding.

Anyone with analytical skills can now be productive producing robust feature data. Windocks does not trade off ease of use for dumbed-down data. It goes beyond other solutions with advanced support for joining disparate data sources, working with multiple and larger data sets, and other capabilities.

More on Windocks Feature Data

Sourcing data from databases and warehouses

Windocks simplifies sourcing tables and merging columns from related tables in complex databases. One option is to begin by subsetting the database or warehouse to reduce its size and to focus the sampled data on the desired timeframe or other attributes. Windocks presents the collections of related tables available and provides a simple method to join columns from related tables to produce the data set needed.
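Windocks performs this step through its web UI without code. For readers who want to see what the underlying operation amounts to, here is a minimal hand-written sketch using Python's built-in sqlite3 module. The `customers` and `orders` tables, their columns, and the `build_source_table` helper are hypothetical and are not part of Windocks.

```python
import sqlite3


def build_source_table(conn, since):
    """Join order rows to customer columns, keeping orders dated on or after `since`."""
    query = """
        SELECT o.order_id, o.order_date, o.amount, c.region
        FROM orders AS o
        JOIN customers AS c ON c.customer_id = o.customer_id
        WHERE o.order_date >= ?
        ORDER BY o.order_id
    """
    return conn.execute(query, (since,)).fetchall()


# Hypothetical sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(customer_id),
        order_date TEXT,
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'west'), (2, 'east');
    INSERT INTO orders VALUES
        (10, 1, '2023-12-30', 20.0),
        (11, 1, '2024-01-05', 35.5),
        (12, 2, '2024-02-01', 12.0);
""")

# Focus the data on a timeframe, then pull in the related 'region' column.
rows = build_source_table(conn, "2024-01-01")
```

The date filter plays the role of the timeframe subset, and the join pulls a column from a related table into a single source data set.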

Cleansing, Normalization, and Statistics

Once a table containing the needed source data has been created, work can begin on cleansing and normalizing the data. Again, this is a simple process of dropping rows with null values, converting string data to numerical columns, and other steps. At this stage aggregates are created and rich statistical values (means, standard deviations, etc.) can be computed.
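As an illustration only, the cleansing and normalization steps described here could be written by hand roughly as follows. This is a sketch using Python's standard library, not the Windocks implementation. The `tier` column, its integer encoding, and the choice of z-score normalization are assumptions made for the example.

```python
from statistics import mean, pstdev

# Hypothetical encoding of a string column into integers.
TIER_CODES = {"basic": 0, "plus": 1, "premium": 2}


def cleanse(rows):
    """Drop rows containing None and convert the string 'tier' value to an integer."""
    cleaned = []
    for amount, tier in rows:
        if amount is None or tier is None:
            continue  # drop rows with null values
        cleaned.append((float(amount), TIER_CODES[tier]))
    return cleaned


def zscores(values):
    """Normalize values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


raw = [(10.0, "basic"), (None, "plus"), (20.0, "premium"), (30.0, None), (30.0, "plus")]
cleaned = cleanse(raw)
amounts = [amount for amount, _ in cleaned]
normalized = zscores(amounts)
```

Two of the five sample rows contain a null and are dropped, and the remaining amounts are centered on their mean.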

Features

The previous steps may already have produced the desired features, but in many cases features are produced by applying logic to the various statistics and row values. Windocks includes simple-to-use formula logic (similar to Excel formulas) for adding features.
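To make the idea of a formula-based feature concrete, the sketch below derives a flag from a row value and two statistics, in the spirit of an Excel-style `IF` expression. It is a hand-coded illustration, not Windocks formula syntax. The function name, the sample amounts, and the two-standard-deviation threshold are assumptions.

```python
from statistics import mean, pstdev


def add_outlier_flag(amounts, threshold=2.0):
    """Return (amount, flag) pairs; flag is 1 when amount > mean + threshold * std."""
    mu = mean(amounts)
    sigma = pstdev(amounts)
    cutoff = mu + threshold * sigma
    return [(a, 1 if a > cutoff else 0) for a in amounts]


# Hypothetical transaction amounts with one unusually large value.
amounts = [10.0, 12.0, 11.0, 9.0, 13.0, 95.0]
features = add_outlier_flag(amounts)
flags = [flag for _, flag in features]
```

A flag like this could serve as one input column for a model that predicts unusual transactions.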

Run the transform and edit as needed

The steps above define the feature data transform, which is saved and then "run." The result is a feature data table delivered on the target database instance. The data can also be delivered as a flat file for further analysis. Windocks transforms are easily edited for updates and optimization, and they are easily shared.

Windocks delivers 6 powerful capabilities

Database Cloning

Create writable copies of databases in seconds instead of hours. This enables fast development, testing, and analytics, helping teams work efficiently with full, realistic environments without impacting production systems.

SQL Server containers

Run multiple isolated SQL Server instances on a single machine using Docker Windows containers. This maximizes hardware utilization, simplifies testing and deployment, and provides great support for remote teams.

Data masking

Protect sensitive data by replacing Personally Identifiable Information (PII) such as names, phone numbers, and addresses with synthetic data that is modeled to reflect the source distribution. This ensures compliance with regulatory and enterprise policies, and prevents exposure of real customer data.

Feature Data

Code free data aggregation, cleansing, and enrichment from multiple sources to create “features” for Machine Learning and AI. Feature data enables advanced analytics and ML modeling, such as predicting fraudulent transactions, improving decision-making, and driving business outcomes.

Single source of truth

Combine data from multiple databases into a unified “feature data table.” This provides accurate, consolidated information across systems, eliminates duplication, and ensures consistent reporting for analytics, compliance, and business intelligence needs.

Database subsetting

Reduce large databases to smaller, targeted datasets while maintaining all key relationships and integrity between tables. Subsetting helps accelerate testing, minimizes storage requirements, and protects sensitive information during development activities.
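The idea of a subset that keeps relationships intact can be sketched by hand as follows. This is a simplified illustration using Python's built-in sqlite3 module, not a description of how Windocks implements subsetting. The table names, columns, and the `subset_by_region` helper are hypothetical.

```python
import sqlite3


def subset_by_region(conn, region):
    """Copy customers in `region`, plus only the orders that reference them."""
    conn.execute(
        "CREATE TABLE customers_subset (customer_id INTEGER PRIMARY KEY, region TEXT)"
    )
    conn.execute(
        "INSERT INTO customers_subset SELECT * FROM customers WHERE region = ?",
        (region,),
    )
    conn.execute("""
        CREATE TABLE orders_subset (
            order_id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers_subset(customer_id),
            amount REAL
        )
    """)
    # Child rows are kept only when their parent row made it into the subset.
    conn.execute("""
        INSERT INTO orders_subset
        SELECT * FROM orders
        WHERE customer_id IN (SELECT customer_id FROM customers_subset)
    """)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(customer_id),
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'west'), (2, 'east'), (3, 'west');
    INSERT INTO orders VALUES (10, 1, 20.0), (11, 2, 35.5), (12, 3, 12.0), (13, 1, 8.0);
""")
subset_by_region(conn, "west")

kept_orders = [r[0] for r in conn.execute("SELECT order_id FROM orders_subset ORDER BY order_id")]
# Count orders in the subset whose customer is missing from the subset.
orphans = conn.execute("""
    SELECT COUNT(*) FROM orders_subset
    WHERE customer_id NOT IN (SELECT customer_id FROM customers_subset)
""").fetchone()[0]
```

Selecting the parent rows first and filtering child rows against them is what keeps every foreign key in the smaller data set pointing at a row that still exists.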