LLM plan management

Applying LLMs to answer business questions requires LLM-generated plans that are documented, easy to explain and validate, and ready for execution.

AI transparency and explainability

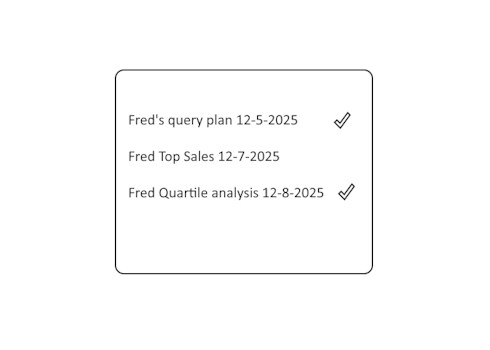

LangGrant delivers transparent, explainable results. LLM plans are documented and saved for human review and validation.

Documented LLM-generated plans that are transparent and explainable help ensure the quality needed for trust within the enterprise, and support compliance with enterprise, regulatory, and risk-management requirements.

Lifecycle management and data delivery

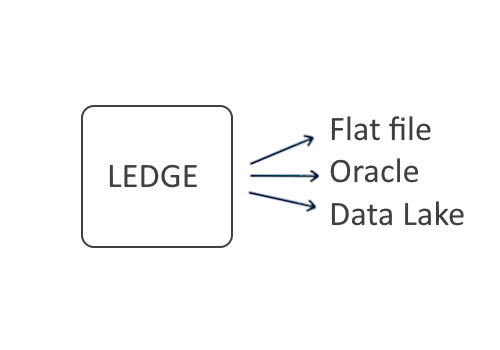

Each generated plan is attached to a query, saved, and available for execution to deliver data. When needed, plans become part of a daily or real-time production workflow, with data delivered as a flat file, to a database table, or to a data lake.
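As a rough illustration of this lifecycle, the sketch below attaches a plan to its query, records a human validation step, and only then allows execution that delivers rows as a flat file. It is not LangGrant's API: `SavedPlan`, `execute_to_flat_file`, and the `run_steps` callback are hypothetical names, and the "validate before execute" rule is an assumption made for the example.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class SavedPlan:
    """Hypothetical record of a generated plan attached to its query."""
    query: str                    # the business question the plan answers
    steps: list[str]              # documented steps a human can review
    status: str = "draft"         # "draft" until a reviewer validates it
    history: list[str] = field(default_factory=list)  # simple audit trail

    def validate(self, reviewer: str) -> None:
        """Record a human sign-off so the plan may be executed."""
        self.status = "validated"
        self.history.append(f"validated by {reviewer}")


def execute_to_flat_file(
    plan: SavedPlan,
    run_steps: Callable[[SavedPlan], Iterable[dict]],
    out: io.TextIOBase,
) -> int:
    """Run a validated plan and write its rows as CSV; return the row count.

    `run_steps` stands in for whatever actually executes the plan
    (assumed here to yield one dict per result row).
    """
    if plan.status != "validated":
        raise PermissionError("plan must be validated before execution")
    rows = list(run_steps(plan))
    if rows:
        writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)
    plan.history.append(f"executed, {len(rows)} rows delivered")
    return len(rows)
```

In a scheduled workflow, the same saved plan could be passed to `execute_to_flat_file` on each run, with `out` pointing at an open file instead of an in-memory buffer; delivery to a database table or data lake would swap out only the writer.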

Explore more capabilities

Orchestration: automated database context

LEDGE automatically delivers complete database context so that LLMs can comprehend multiple databases simultaneously, at scale. Like a skilled engineer, an LLM that understands the databases can contribute to solution design.

Orchestration: analytic plan

LEDGE binds LLMs to deliver accurate analytic plans for user queries. Plans are saved, easily validated and modified, and run to deliver analytics data within minutes of the user query.

Governance

PII safeguards, authorization controls, data residency rules, firewall restrictions, and token-governance policies are built in by design. No sensitive data leaves governed systems.

Plan management

LLM-generated plans are saved, easily reviewed, validated, and modified, and then executed, making LLM use transparent, explainable, and repeatable.

Database cloning and containers

On-demand database clones with containers provide agent developers with production database copies (with optional masking) for agentic AI dev/test.

Database subsetting and synthetic data

Database subsetting with synthetic data provides added context for working with complex multi-database environments.