Synthetic Data

Windocks generates synthetic data that reflects the distribution of the source, for full databases as well as flat files. Synthetic data can also be used to mask PII in databases.

Synthetic data can be applied to complete databases and built into clonable images, ensuring that database clones reflect security policies and are delivered safe-for-use.

Synthetic data that models a source database is preferred for data privacy, because masked or obfuscated data is often vulnerable to linkage attacks on secondary identifiers. Synthetic data is also preferred for ML and AI initiatives, as modeled synthetic data enhances data accuracy and utility.
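To illustrate why masking alone can fall short, here is a minimal sketch of a linkage attack. All of the data, column names, and the `link` helper are hypothetical and are not part of Windocks. The names in the "masked" table are hidden, but the secondary identifiers (ZIP code, birth year, sex) are untouched, so joining against a public list can re-identify rows.

```python
# Hypothetical "masked" table: names are hidden, secondary identifiers are intact.
masked_patients = [
    {"name": "XXXX", "zip": "98101", "birth_year": 1984, "sex": "F", "diagnosis": "asthma"},
    {"name": "XXXX", "zip": "98052", "birth_year": 1990, "sex": "M", "diagnosis": "diabetes"},
    {"name": "XXXX", "zip": "98101", "birth_year": 1971, "sex": "M", "diagnosis": "hypertension"},
]

# Hypothetical public list that carries the same secondary identifiers.
public_records = [
    {"name": "Alice Rivera", "zip": "98101", "birth_year": 1984, "sex": "F"},
    {"name": "Bob Chen", "zip": "98052", "birth_year": 1990, "sex": "M"},
    {"name": "Dana Smith", "zip": "98033", "birth_year": 1965, "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def link(masked, public, keys=QUASI_IDENTIFIERS):
    """Return (public name, diagnosis) pairs where the identifiers match exactly one person."""
    index = {}
    for row in public:
        index.setdefault(tuple(row[k] for k in keys), []).append(row["name"])

    matches = []
    for row in masked:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match re-identifies the masked row
            matches.append((names[0], row["diagnosis"]))
    return matches


reidentified = link(masked_patients, public_records)
```

In this toy data, two of the three masked rows are re-identified. Synthetic rows that are generated from a model of the source, rather than copied from it, do not correspond one-to-one with real individuals in this way.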

Windocks synthetic data also supports cross-platform movement. For example, synthetic data applied to a SQL Server database can be moved to Snowflake. Data movement includes any combination of SQL Server, PostgreSQL, MySQL, Snowflake, Azure MI, Azure SQL, Aurora, AWS RDS, and Oracle.

Schema changes are detected automatically, to ensure delivered databases reflect any changes in source schema.

DevOps with Subset and Synthetic Data

Delivering data for a repeatable, agile dev/test process is challenging because production database restores are lengthy and personally identifiable information (PII) must be protected.

With Windocks, data scientists, developers, and test teams can work with right-sized data sets, ranging from writable production database images to database subsets and tables, optionally populated with synthetic data.

Windocks automates the creation of high-fidelity subsets and synthetic data:

- Simply provide a percentage size of source database, and Windocks delivers a subsetted database in minutes.

- Model the source data distribution using built-in synthetic data generation from Windocks, or open source or in-house libraries.

- Validate the data distribution and privacy with built-in graphical distributions and metrics, or with your own custom code.

- Deploy synthetic data sets to target database instances or Docker containers, giving dev and test teams immediate access and making experiments reproducible.
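As an example of the custom validation code mentioned above, the following sketch compares one categorical column between a source and a synthetic data set using total variation distance. This is one common metric, chosen here for illustration; it is not necessarily the metric Windocks reports, and the sample data and function names are hypothetical.

```python
from collections import Counter


def frequencies(values):
    """Map each distinct value to its relative frequency."""
    counts = Counter(values)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


def total_variation_distance(source, synthetic):
    """Return 0.0 for identical distributions and 1.0 for disjoint ones."""
    p, q = frequencies(source), frequencies(synthetic)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


# Hypothetical column values from a source table and its synthetic counterpart.
source_states = ["WA"] * 50 + ["OR"] * 30 + ["CA"] * 20
synthetic_states = ["WA"] * 48 + ["OR"] * 33 + ["CA"] * 19

tvd = total_variation_distance(source_states, synthetic_states)
```

A value near zero suggests the synthetic column tracks the source closely. What threshold counts as acceptable depends on the use case.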

Masking with Synthetic Data - video

Steps to orchestrate synthetic data generation

1. Install Windocks

After you have received your download link and license key, install Windocks Synthetic on a Windows or Linux machine, or in a Docker container (see RESOURCES / Get started).

2. Connect to the source database and create a data source

Provide credentials for the source database. Once Windocks has connected, select your database from the connection and create a data source.

3. Define a transform and run it

- Choose to deliver a subset database, a synthetic database, or both, and assign appropriate database names.

- Optionally, deliver synthetic data to a different database type (such as SQL Server to Snowflake).

- Specify filters / WHERE clauses on the source to get the subset and synthetic data you want. You may also select specific rows to include in the subset / synthetic target.

- Specify the size as a percentage of the source, save, and "run" the transform.

- Windocks delivers the subset and synthetic populated databases in minutes, while maintaining the production relationships and data distribution.

- Review the transform report and also the quality of the subset and synthetic databases with built-in distribution charts.

This workflow can be automated using REST APIs.
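When preparing to automate, it can help to capture the choices from step 3 as a structured payload. The sketch below is illustrative only: the `TransformSpec` class and every field name are hypothetical and do not describe the actual Windocks REST API. Consult the Windocks API documentation for the real endpoints and request schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TransformSpec:
    """Hypothetical transform definition; field names are NOT the Windocks schema."""

    data_source: str                   # name of the data source created in step 2
    percent_of_source: float           # target size as a percentage of the source
    deliver_subset: bool = True
    deliver_synthetic: bool = True
    target_platform: str = "sqlserver"  # e.g. "snowflake" for cross-platform delivery
    where_filters: dict = field(default_factory=dict)  # table name -> WHERE clause

    def validate(self):
        if not 0 < self.percent_of_source <= 100:
            raise ValueError("percent_of_source must be greater than 0 and at most 100")
        if not (self.deliver_subset or self.deliver_synthetic):
            raise ValueError("choose a subset, a synthetic database, or both")
        return self

    def to_json(self):
        """Serialize a validated spec to a JSON string."""
        return json.dumps(asdict(self.validate()), sort_keys=True)


spec = TransformSpec(
    data_source="sales_db",
    percent_of_source=10,
    target_platform="snowflake",
    where_filters={"orders": "order_date >= '2023-01-01'"},
)
payload = spec.to_json()
```

Validating the definition before sending it anywhere catches mistakes such as a zero percentage or a transform with no outputs selected.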

Windocks delivers 6 powerful capabilities

Database Cloning

Create writable copies of databases in seconds instead of hours. This enables fast development, testing, and analytics, helping teams work efficiently with full, realistic environments without impacting production systems.

SQL Server containers

Run multiple isolated SQL Server instances on a single machine using Docker Windows containers. This maximizes hardware utilization, simplifies testing and deployment, and provides great support for remote teams.

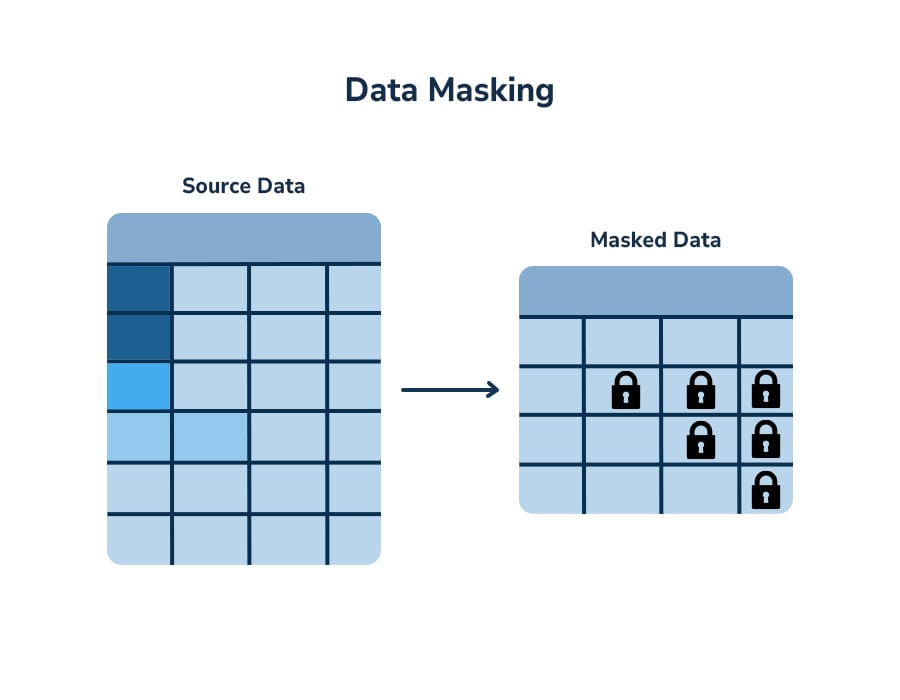

Data masking

Protect sensitive data by replacing Personally Identifiable Information (PII) like names, phone numbers, and addresses with synthetic data that is modeled to reflect source distribution. This ensures compliance with regulatory and enterprise policies, and prevents exposure of real customer data.
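A minimal sketch of the idea, assuming phone numbers stored as `AAA-EEE-NNNN` strings: the synthetic values keep the source's area-code distribution, while the remaining digits are generated rather than copied. The function name, the sample data, and the use of the fictional 555-01xx range are assumptions for illustration, not a description of how Windocks generates values.

```python
import random
from collections import Counter


def synthesize_phones(phones, seed=0):
    """Generate one synthetic number per source number, weighted by area-code frequency."""
    rng = random.Random(seed)
    area_codes = Counter(phone[:3] for phone in phones)
    codes, weights = zip(*area_codes.items())

    synthetic = []
    for _ in phones:
        code = rng.choices(codes, weights=weights, k=1)[0]
        # 555-0100 through 555-0199 is a range reserved for fictional use.
        synthetic.append(f"{code}-555-{rng.randrange(100, 200):04d}")
    return synthetic


# Hypothetical source column containing real customer numbers.
source_phones = ["206-200-0001", "206-200-0002", "206-200-0003", "425-300-0004"]
masked_phones = synthesize_phones(source_phones)
```

Because every generated number falls in the fictional range, none of the output values can coincide with a source value in this example.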

Feature Data

Code-free data aggregation, cleansing, and enrichment from multiple sources to create “features” for Machine Learning and AI. Feature data enables advanced analytics and ML modeling, such as predicting fraudulent transactions, improving decision-making, and driving business outcomes.

Single source of truth

Combine data from multiple databases into a unified “feature data table.” This provides accurate, consolidated information across systems, eliminates duplication, and ensures consistent reporting for analytics, compliance, and business intelligence needs.

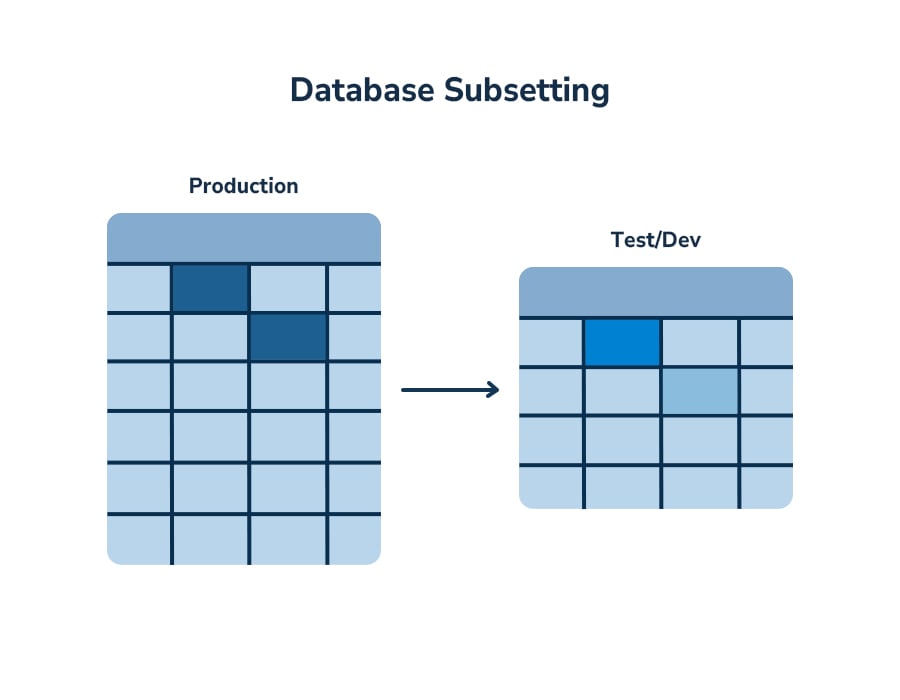

Database subsetting

Reduce large databases to smaller, targeted datasets while maintaining all key relationships and integrity between tables. Subsetting helps accelerate testing, minimizes storage requirements, and protects sensitive information during development activities.
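The core idea of relationship-preserving subsetting can be sketched for a single parent/child pair: sample a percentage of parent rows, then keep only the child rows whose foreign key points at a sampled parent. The table shapes and the `subset_with_integrity` helper are hypothetical, and a real database with many interrelated tables needs a more general traversal than this.

```python
import random


def subset_with_integrity(customers, orders, percent, seed=0):
    """Sample `percent` of customers and keep only the orders that reference them."""
    rng = random.Random(seed)
    keep = max(1, round(len(customers) * percent / 100))
    kept_customers = rng.sample(customers, keep)
    kept_ids = {customer["id"] for customer in kept_customers}
    # Dropping orders for unsampled customers avoids orphaned foreign keys.
    kept_orders = [order for order in orders if order["customer_id"] in kept_ids]
    return kept_customers, kept_orders


# Hypothetical data: 20 customers with 3 orders each.
customers = [{"id": i, "name": f"customer-{i}"} for i in range(1, 21)]
orders = [{"id": 100 + i, "customer_id": (i % 20) + 1} for i in range(60)]

sub_customers, sub_orders = subset_with_integrity(customers, orders, percent=25)
```

Every order in the result references a customer that is also in the result, so foreign key constraints on the subset still hold.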